Federal

AI Built for Federal Standards: Secure, Scalable, Sovereign.

Air-Gap Ready

Deploy and scale secure AI for federal missions.

Zero External Dependence

No data leaves the network, and there is no third-party access.

Sovereign Control

Full ownership of models, infrastructure, telemetry, and data.

Portable Runtime

Same stack, consistent execution everywhere.

Every Environment Covered

On-prem, edge, cloud, or air-gapped.

Cloud Independence

Freedom to deploy without reliance on a single provider.

Mission-Grade Compliance

Meets data privacy and residency mandates.

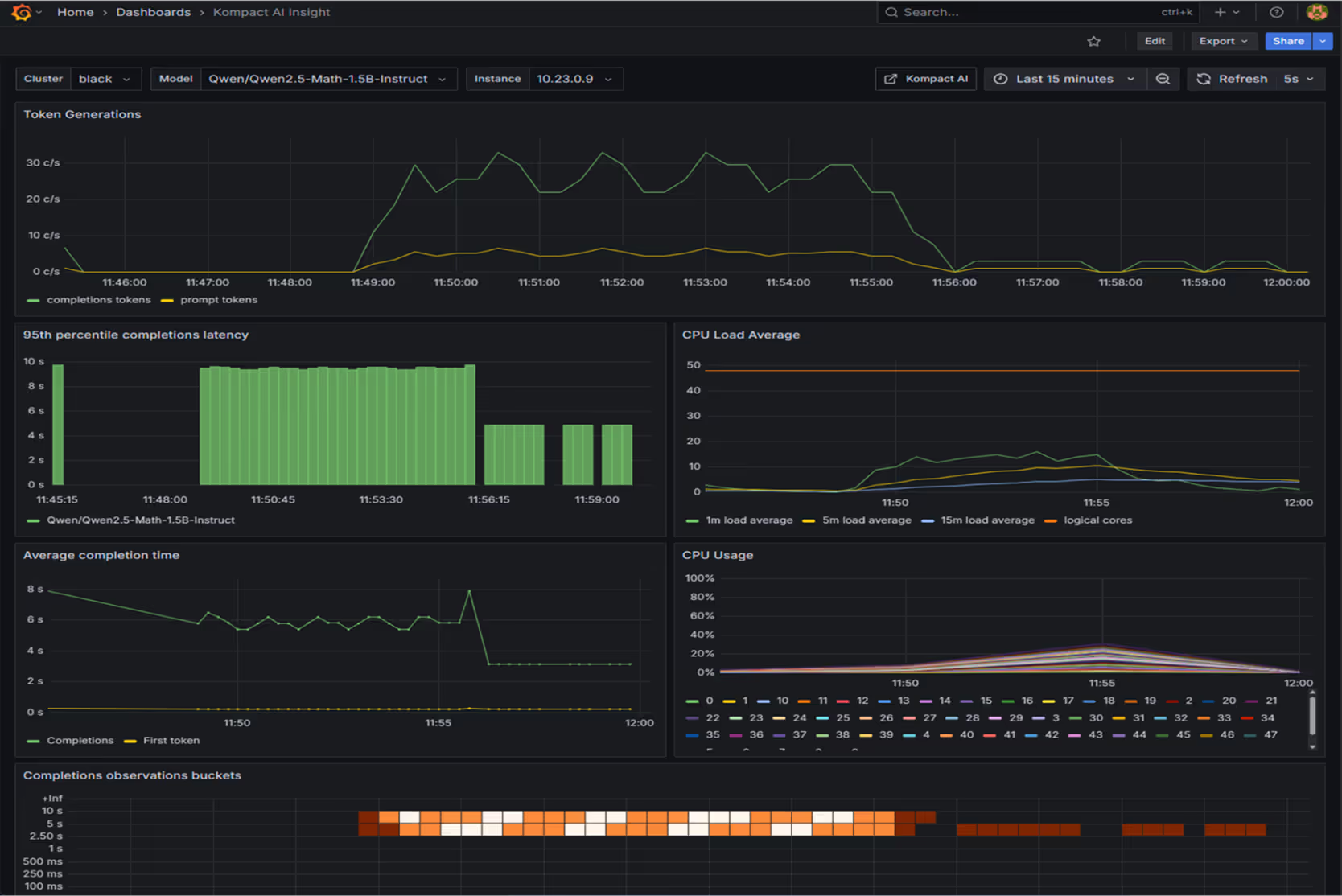

Auditable Operations

Transparent observability for monitoring and reporting.

Data Integrity First

Models execute without exposing sensitive data.

Scale Without Boundaries

Supports seamless horizontal scaling across CPUs, nodes, and on-prem clusters.

Model instances expand by adding cores or machines, with no re-architecting required.

Enables capacity to grow linearly as traffic increases.

Maintains consistent performance, reliability, and cost efficiency at scale.

CPU-Powered AI

No GPUs required; runs on existing infrastructure.

Predictable Costs

Scale without budget overruns.

Low-Latency Inference

Optimised for time-sensitive missions.

Proprietary Models Stay Yours

Your model’s IP and weights remain fully with you.

We provide the Kompact AI SDK; your teams use it to wire up the model securely.

OpenAI-Compatible APIs

Applications interact with Kompact AI-optimised models through OpenAI-compatible APIs, enabling easy integration with minimal or no code changes.
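As a rough illustration of what "OpenAI-compatible" usually means in practice, the sketch below builds a standard OpenAI-style chat completions request using only the Python standard library. It assumes the deployment exposes the conventional `/v1/chat/completions` path. The base URL and model name (`BASE_URL`, `MODEL_NAME`) and the helper functions are hypothetical placeholders, not taken from Kompact AI documentation.

```python
import json
import urllib.request

# Hypothetical placeholders: substitute the endpoint and model name of your
# own deployment. These are assumptions, not documented Kompact AI values.
BASE_URL = "http://localhost:8000/v1"
MODEL_NAME = "my-optimised-model"


def build_chat_request(prompt: str, base_url: str = BASE_URL,
                       model: str = MODEL_NAME) -> urllib.request.Request:
    """Build (but do not send) a POST to an OpenAI-style /chat/completions endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat_request(req: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice, per the OpenAI response shape."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Because the request shape matches the OpenAI API, teams already using the official `openai` Python client would typically only need to point the `base_url` argument of `OpenAI(...)` at their own deployment, assuming it accepts that client unchanged.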