Kompact AI Delivers Value

Predictable AI Economics

- Kompact AI-based inference keeps per-user/API call costs stable.

- More users and queries wouldn't mean exponential cost growth and performance drops

- Serve more demand without eroding unit economics.

Fast Time-to-Value

- Run more experiments without compute bottlenecks.

- Move from prototype to production in weeks.

- Test more ideas, raise success rates, and reduce wasted spend.

Advanced AI Apps and Pipeline Innovation

- Enterprise-grade knowledge assistants with sustainable economics.

- Accelerate time-to-market—enabling faster deployment, agile development.

Scalable

- Run secure, private AI deployments On Premise and On Device, fully under your control.

- Deploy on major cloud platforms like GCP, AWS, Azure with easy portability and no vendor lock-in.

High Availability

- Built-in load balancing distributes requests intelligently, so the system stays reliable at any scale.

- Models run without cold starts, ensuring smooth and uninterrupted responses even under heavy workloads.

- Designed for resilience, eliminating risks of outages during peak usage

Kompact AI Runtime

Optimises token generation throughput and system latency across standard CPU infrastructure without changing model weights.

Flexible Deployment – Runs across cloud, on-premises, or embedded environments.

Architecture-Specific Builds – Each model is compiled for its CPU architecture to maximise throughput.

Scales Across Cores – Supports single-core, multi-core, and NUMA systems seamlessly.

Remote Access,

Built for Control

REST-based server hosted on NGINX for secure, flexible model access.

Supports pluggable modules for custom logic like access control and user restrictions.

Enables enterprise controls such as rate-limiting and token caps per user or team.

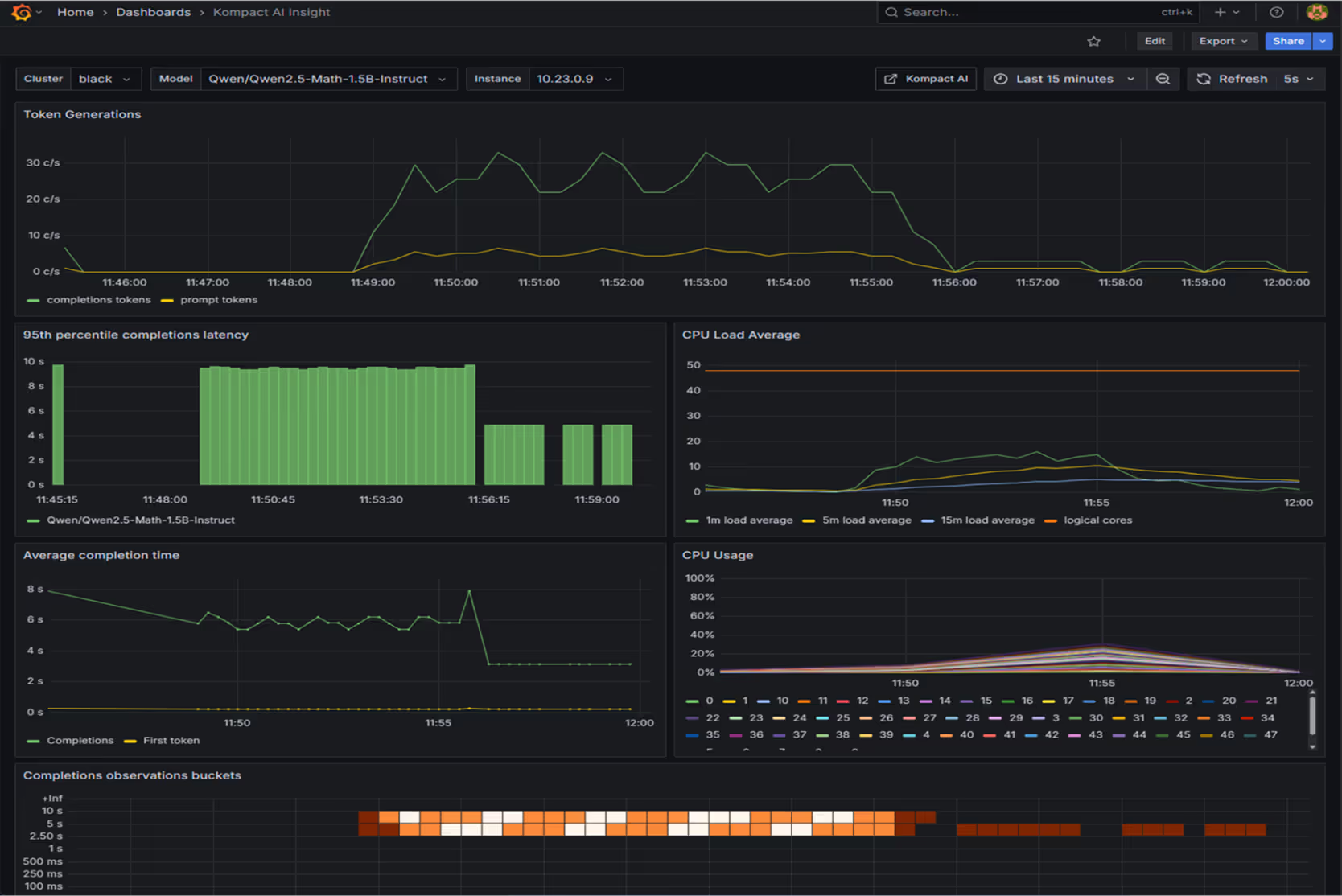

Monitor What Matters with built-in Observability.

Powered by OpenTelemetry for seamless integration with tools like Prometheus and Grafana.

Built-in monitoring for inputs, outputs, SLAs, user requests, CPU, memory, and network usage.

Covers both runtime and REST service, giving enterprises full visibility into model performance.

Flexible Model Access

OpenAI-Compatible APIs

Access models seamlessly with OpenAI-compatible libraries.

Native HTTP Support

Use any HTTP-specific library (e.g., OkHttp in Java) to interact with Kompact AI models.

// Initialize chat client with API key and endpoint

curl -X http://$INSTANCE_IP_ADDRESS/api/v1/chat/completions \ # Base URL Deployment URL

-H "Content-Type: application/json" \

-d '{

"model": "Qwen/Qwen2.5-Math-1.5B-Instruct", #model name

"messages": [

{ "role": "system", "content": "You are a helpful assistant." }, #system instruction

{ "role": "user", "content": "How to get a number 100 by using four sevens (7’s) and a one (1)?" } #user querry

]

}'

from openai import OpenAI

# Initialise the OpenAI client with API key and base URL

client = OpenAI(

base_url="http://34.67.10.255/api/v1", # Base URL / Deployment URL

api_key="pass"

)

# Create a chat completion request

completion = client.chat.completions.create(

model="Qwen/Qwen2.5-Math-1.5B-Instruct", # Model to use

messages=[

{

"role": "system", #System instructions

"content": "You are a helpful assistant."

},

{

"role": "user",

"content": "How do I declare a string variable for a first name in java ?" #user query

}

],

)

print(completion.choices[0].message.content)

package org.example;

import com.openai.client.OpenAIClient;

import com.openai.client.okhttp.OpenAIOkHttpClient;

import com.openai.models.ChatModel;

import com.openai.models.chat.completions.ChatCompletion;

import com.openai.models.chat.completions.ChatCompletionCreateParams;

public class Main {

public static void main(String[] args) {

// Initialize the OpenAI client

OpenAIClient client = OpenAIOkHttpClient.builder()

.apiKey("pass") // Replace with your key or use fromEnv()

.baseUrl("http://34.67.10.255/api/v1") # Base URL / Deployment URL

.build();

// Build chat completion request

ChatCompletionCreateParams params = ChatCompletionCreateParams.builder()

.model("Qwen/Qwen2.5-Math-1.5B-Instruct") #model name

.addSystemMessage("You are a helpful assistant.") #system instructions

.addUserMessage("How to get a number 100 by using four sevens (7’s) and a one (1)?") #user query

.build();

ChatCompletion chatCompletion = client.chat().completions().create(params);

System.out.println(chatCompletion.choices().get(0).message().content());

}

}

package main

import (

"context"

"github.com/openai/openai-go"

"github.com/openai/openai-go/option"

)

func main() {

// Initialize client with API key and endpoint

client := openai.NewClient(

option.WithAPIKey("fake"),

option.WithBaseURL("http://34.67.10.255/api/v1"),

)

//create chat completion request

chatCompletion, err := client.Chat.Completions.New(context.TODO(), openai.ChatCompletionNewParams{

Messages: []openai.ChatCompletionMessageParamUnion{

openai.SystemMessage("You are a helpful assistant."), #system instruction

openai.UserMessage("How do I declare a string variable for a first name in javascript ?"), #user query

},

Model: "Qwen/Qwen2.5-Math-1.5B-Instruct", #model name

})

if err != nil {

panic(err.Error())

}

println(chatCompletion.Choices[0].Message.Content)

}

import OpenAI from 'openai';

// Initialize client with API key and base URL

const client = new OpenAI({

apiKey:"fake",

baseURL:'http://34.67.10.255/api/v1', # Base URL / Deployment URL

});

const completion = await client.chat.completions.create({

model: 'Qwen/Qwen2.5-Math-1.5B-Instruct', #model name

messages: [

{

"role": "system",

"content": "You are a helpful assistant." #system instruction

},

{

"role": "user",

"content": "How do I declare a string variable for a first name in javascript ?" #user querry

}

],

});

console.log(completion.choices[0].message.content);

using OpenAI.Chat;

using OpenAI;

using System.ClientModel;

// Initialize chat client with API key and endpoint

ChatClient client = new(

model: "Qwen/Qwen2.5-Math-1.5B-Instruct", #model name

credential: new ApiKeyCredential("fake"),

options: new OpenAIClientOptions()

{

Endpoint = new Uri("http://34.67.10.255/api/v1") # Base URL / Deployment URL

}

);

List<ChatMessage> messages =

[

new SystemChatMessage("You are a helpful assistant."), #system instruction

new UserChatMessage("How do I declare a string variable for a first name in javascript ?") #user query

];

ChatCompletion completion = client.CompleteChat(messages);

Console.WriteLine($"[ASSISTANT]: {completion.Content[0].Text}");

Bring Your Own Models

01

No Trade-Offs

Run custom models on CPUs with full fidelity.

02

IP Control

Avoid vendor lock-in while retaining complete ownership of proprietary models.

03

Cost-Effective Scaling

Deploy and scale enterprise models on CPUs without compromising performance or accuracy.